In our architecture categraf reports to n9e-edge, so I changed the categraf heartbeat address to port 19000 on the edge. Now it fails with: `heartbeat.go:160: E! heartbeat status code: 404 response: 404 page not found`

Meanwhile, the n9e web UI shows every host's status as unknown.

Latest categraf: heartbeat report returns 404, all host statuses unknown
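A 404 means the server at that address has no handler for the requested path. That is different from an auth or payload error. To tell these cases apart, you can call the heartbeat URL directly. The sketch below is a hypothetical diagnostic, not part of categraf. Its `{}` payload is a placeholder, because the real heartbeat body carries host metadata, so a valid route may still reply with a 4xx. A 404, however, points at the path or at the target server.

```go
package main

import (
	"bytes"
	"fmt"
	"net/http"
	"os"
	"time"
)

// probeHeartbeat POSTs a placeholder JSON body to url and returns the HTTP
// status code. The "{}" payload is an assumption, not categraf's real body.
func probeHeartbeat(url string) (int, error) {
	client := &http.Client{Timeout: 5 * time.Second}
	resp, err := client.Post(url, "application/json", bytes.NewBufferString("{}"))
	if err != nil {
		return 0, err
	}
	defer resp.Body.Close()
	return resp.StatusCode, nil
}

// diagnose maps a status code to a coarse category.
func diagnose(code int) string {
	switch {
	case code == http.StatusNotFound:
		// Nothing is registered at this path on the target server.
		return "not-found"
	case code >= 200 && code < 300:
		return "ok"
	default:
		// A non-404 answer: the path is probably routed; inspect status and body.
		return "other"
	}
}

func main() {
	// Hypothetical default: the heartbeat URL from the config in this thread.
	url := "http://edgeserver:19000/v1/n9e/heartbeat"
	if len(os.Args) > 1 {
		url = os.Args[1]
	}
	code, err := probeHeartbeat(url)
	if err != nil {
		fmt.Println("request failed:", err)
		return
	}
	fmt.Printf("%s -> %d (%s)\n", url, code, diagnose(code))
}
```

Run it against the edge URL, then against the address categraf used before the change. The results show whether each server serves that path.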


```toml
[global]
# whether print configs
print_configs = false
# add label(agent_hostname) to series
# "" -> auto detect hostname
# "xx" -> use specified string xx
# "$hostname" -> auto detect hostname
# "$ip" -> auto detect ip
# "$hostname-$ip" -> auto detect hostname and ip to replace the vars
hostname = "$ip"
# will not add label(agent_hostname) if true
omit_hostname = false
# # s | ms
# precision = "ms"
# global collect interval
interval = 30
# input provider settings; optional: local / http
providers = ["local"]

[global.labels]
# region = "shanghai"
# env = "localhost"

[log]
# file_name is the file to write logs to
file_name = "stdout"
# options below do not take effect when file_name is stdout or stderr
# max_size is the maximum size in megabytes of the log file before it gets rotated. It defaults to 100 megabytes.
max_size = 100
# max_age is the maximum number of days to retain old log files based on the timestamp encoded in their filename.
max_age = 1
# max_backups is the maximum number of old log files to retain.
max_backups = 1
# local_time determines if the time used for formatting the timestamps in backup files is the computer's local time.
local_time = true
# Compress determines if the rotated log files should be compressed using gzip.
compress = false

[writer_opt]
batch = 1000
chan_size = 1000000

[[writers]]
url = "http://edgeserver:19000/prometheus/v1/write"
# Basic auth username
basic_auth_user = ""
# Basic auth password
basic_auth_pass = ""
## Optional headers
# headers = ["X-From", "categraf", "X-Xyz", "abc"]
# timeout settings, unit: ms
timeout = 5000
dial_timeout = 2500
max_idle_conns_per_host = 100

[http]
enable = false
address = ":9100"
print_access = false
run_mode = "release"

[ibex]
enable = false
## ibex flush interval
interval = "1000ms"
## n9e ibex server rpc address
servers = ["127.0.0.1:20090"]
## temp script dir
meta_dir = "./meta"
```
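The `[global]` comments define how `hostname` placeholders become the `agent_hostname` label. That label identifies each host in n9e. The Go sketch below illustrates those documented rules. It is hypothetical and not categraf's code. The function name `expandHostname` is invented, and the hostname and IP are passed in instead of being auto-detected.

```go
package main

import (
	"fmt"
	"os"
	"strings"
)

// expandHostname applies the placeholder rules listed in the [global]
// comments: "" means the detected hostname; "$hostname" and "$ip" are
// substituted; any other literal string is used as-is.
func expandHostname(setting, hostname, ip string) string {
	if setting == "" {
		return hostname
	}
	r := strings.NewReplacer("$hostname", hostname, "$ip", ip)
	return r.Replace(setting)
}

func main() {
	host, err := os.Hostname()
	if err != nil {
		host = "localhost"
	}
	ip := "192.0.2.10" // hypothetical: a real agent would detect this address
	for _, s := range []string{"", "web01", "$hostname", "$ip", "$hostname-$ip"} {
		fmt.Printf("%q -> %q\n", s, expandHostname(s, host, ip))
	}
}
```

With `hostname = "$ip"`, as in this config, each host is identified by its detected IP address.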

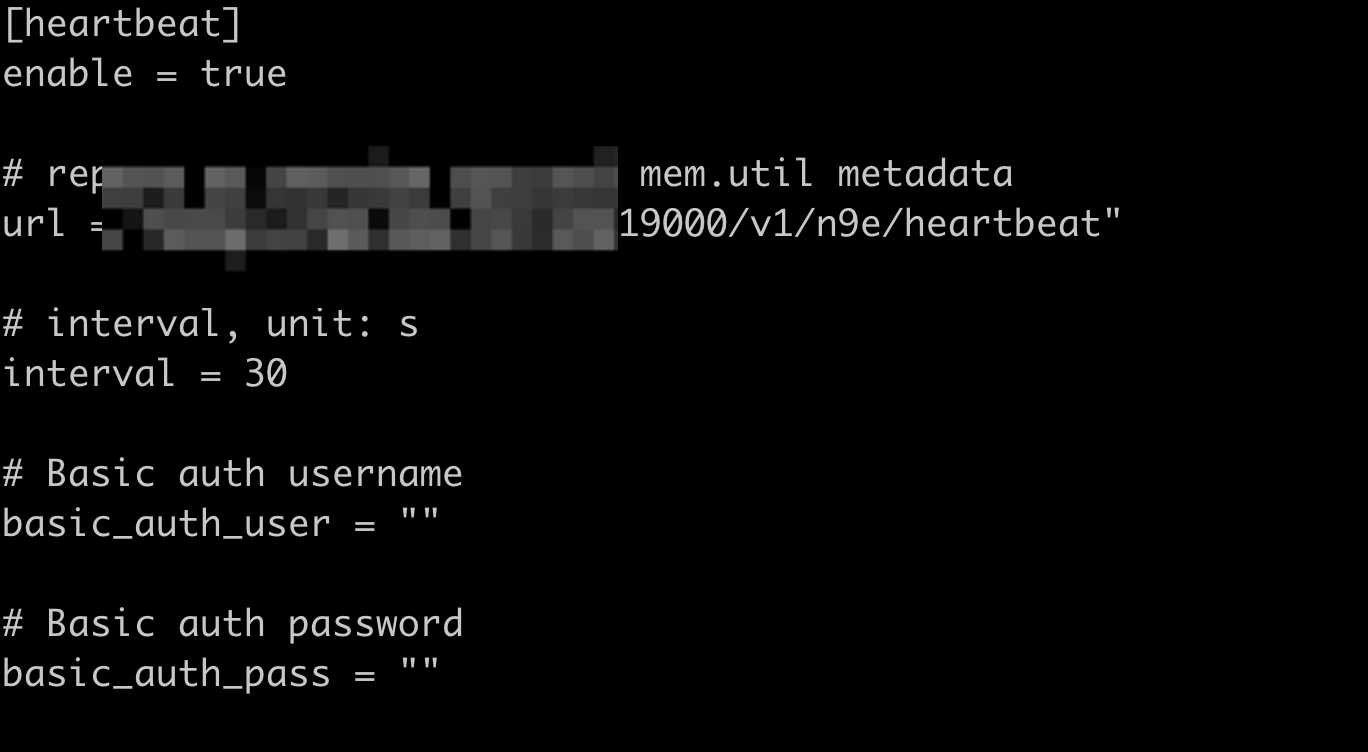

```toml
[heartbeat]
enable = true
# report os version cpu.util mem.util metadata
url = "http://edgeserver:19000/v1/n9e/heartbeat"
# interval, unit: s
interval = 30
# Basic auth username
basic_auth_user = ""
# Basic auth password
basic_auth_pass = ""
## Optional headers
# headers = ["X-From", "categraf", "X-Xyz", "abc"]
# timeout settings, unit: ms
timeout = 5000
dial_timeout = 2500
max_idle_conns_per_host = 100

[prometheus]
enable = false
scrape_config_file = "/path/to/in_cluster_scrape.yaml"
## log level, debug warn info error
log_level = "info"
## wal file storage path, default ./data-agent
# wal_storage_path = "/path/to/storage"
## wal reserve time duration, default value is 2 hours
# wal_min_duration = 2
```

Did you change this part?